Sound Design

Moths

I was the sound designer and mixer for this new short film written and directed by Pema Baldwin. I also recorded all of the live instrumentation performed by cellist Elizabeth Kate Hall-Keough. As part of the design process, I manipulated parts of her performance with effects and plug-ins to generate ambient tones and motifs that complement the dreamy yet desolate landscape inhabited by the father and son duo at the heart of the story. This combination of live and edited sound is a key part of my practice.

Head to my Video page to hear more examples of this in “Hear With You,” a film for which I designed an original score using improvised recordings from the contemporary music ensemble AREPO.

The Call of Spring

I created this video while living in Chicago, and while this project certainly has an emphasis on cinematography and video editing, I believe it is just as important to showcase its audio elements. I wanted to work with nostalgia as my canvas, fusing and dreamifying seasonally-based sounds with musical elements to push and pull at this sensation.

Christina’s World

A soundscape I created as an interpretation of Andrew Wyeth's incredible painting "Christina's World." I have always been a massive fan of this painting and its fleetingly open, desolate landscape has always evoked certain sounds in my mind. This sound-painting includes live guitar as well as ambient instrumentation that I composed in Logic. Sound effects are from free-to-use sources.

One Second Constraint Film

Here I scored one minute of an early abstract film using only one second of original audio. I decided to use Hans Richter’s “Rhythmus 21” from 1921, and for the audio I recorded myself yawning. Even though I was very limited with my audio choice, the opportunities to edit and distort the file were endless. I also decided to add another challenge and try and complement the grainy, analog quality of the original film.

You’ll find the original second of audio at the end in case you are interested.

Bathroom Soundscape

Simply put, this audio-visual piece is a reimagining of my bathroom. Big stuff, I know! Can you tell this was during lockdown?

At the heart of this piece is the soundscape: everything that you hear was recorded in my bathroom on a Zoom H6, and these sounds were later edited and sequenced together. I wanted a simple visual in line with the atmosphere of the soundscape, so I experimented with the human body. I wanted viewers to lose track of its form as they did the same with the audio.

Podcasts

During my year with Northwestern’s SAI program, I worked for Headquarters Records outside of class, a new operation that had been recently founded by Ryan Brady, former VP of Marketing at Atlantic Records and an NU alumnus. Through my work for Ryan, I was introduced to his friend and collaborator Paul Kaminski, with whom he hosted a podcast called “Now Hear This.” Simple premise: each of the hosts would take turns presenting an album and explaining why it is worth checking out to the other host. Paul also runs a podcast with his brother about Jack White called “The Third Men Podcast.” I went on to do sound mixing and editing for both of these shows.

For “The Third Men Podcast,” check out “Dodge and Burn: Analysis & Review Vol. 1” and “Sound and Color feat. Coppersound Pedals” to hear examples of my work.

After Ryan’s passing, Paul continued “Now Hear This” in his memory, sifting through Ryan’s list of favorite albums and seeking to learn why Ryan wanted the world to hear them. Paul asked me to come on the show to discuss Peter Gabriel’s third self-titled album. I quickly agreed to co-host and edit the episode. Check it out here: “Peter Gabriel 3 (Melt).”

I also edited an episode of “Yesterday and Today,” a show about The Beatles hosted by Wayne Kaminski, Paul’s father. You can find that episode here: “McCartney: Anatomy of a Trilogy feat. Luther Russell.”

Most recently, I mixed three episodes of the podcast “Dude Better,” a show centered on having difficult conversations as a path to better wellness, hosted by childhood friends Alan Baxter and Andrew M. Cohler. I also wrote the theme music and transitional musical phrases.

My own podcast episode…

For the final project of my “The Art of the Podcast” class, I made a short podcast episode of my own, intended to be a proof of concept rather than a fully fleshed-out, hour-long piece. I wanted to explore personal concepts of introversion and loneliness, and along the way I composed the music myself (except for the wonderful David Sylvian clip at the beginning), went on long walks, and interviewed a couple of my friends.

Listen to this project using the link on the right.

Remixing Hollywood

Hereditary (dir. Ari Aster)

CW: Gore, disturbing images, nudity, and loud noises.

Welcome to the final project for my graduate-level “Film Sound 1: Narrative” course. I decided to tackle the last fifteen minutes or so of Ari Aster's "Hereditary," stripping all of the audio and building it from the ground up using foley, my own voice and music, and a couple of royalty-free sounds (for example, I wasn’t allowed to shatter glass in the campus studio). Next time!

Enter the Void (dir. Gaspar Noé)

CW: Blood and gore.

For this assignment, I stripped all the audio from this segment of Gaspar Noé’s "Enter the Void" and built it from scratch using my original music composed in Logic Pro X and free sound effects.

My own software instrument

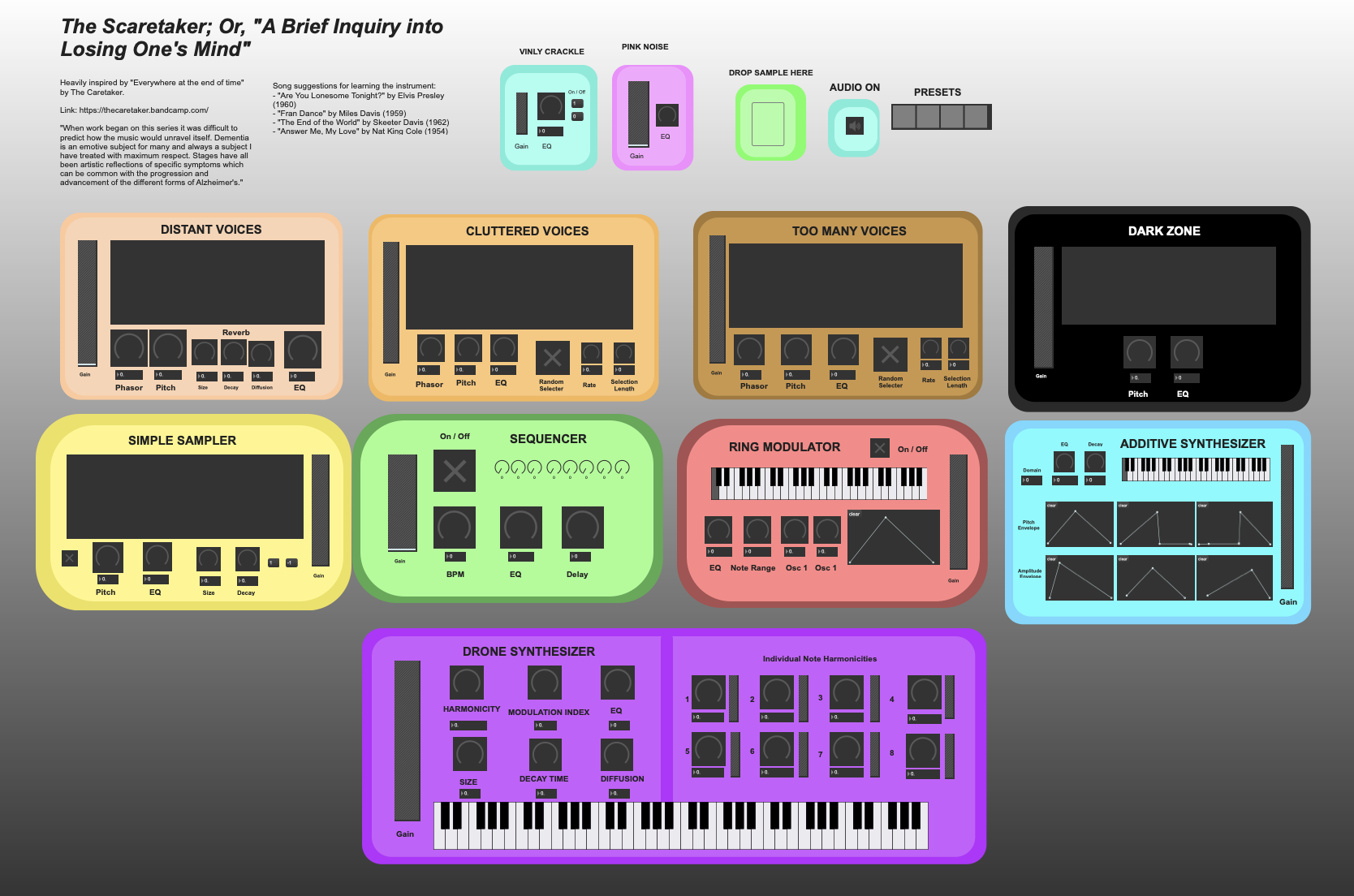

So, uh… what am I looking at?

I designed this software instrument using the program Max and it takes heavy inspiration from “Everywhere at the end of time” by The Caretaker. Quoted from that album’s description: “When work began on this series it was difficult to predict how the music would unravel itself. Dementia is an emotive subject for many and always a subject I have treated with maximum respect. Stages have all been artistic reflections of specific symptoms which can be common with the progression and advancement of the different forms of Alzheimer’s.” The album does this incredibly well and I wanted to try and mimic that artistic feat as much as possible (and also make it sound even scarier).

My goal for this project was to design a program that can receive a song and inject into it the spacey atmosphere and haunting vibe of The Caretaker’s album (I recommend quickly perusing the aforementioned album so you can understand the style I am describing). The above screenshot shows several different interfaces that each have their own creative applications. All can be used simultaneously with a single audio file (song) that is dropped into the green input object at the top.

The “Simple Sampler,” “Distant Voices,” “Cluttered Voices,” “Too Many Voices,” and “Dark Zone” units are the audio samplers that let you pick chunks of the song to loop, each having increasingly intense, distorted, and ultimately terrifying results; the sequencer creates repetitive note sequences on top of the samples to add a bit of musicality if desired; the pink noise generator adds texture to the soundscape; the Drone Synthesizer at the bottom allows you to create reverberant, droning textures and adjust the amount of harmonics of all the notes; finally, the ring modulator and additive synthesizers are additional musical devices that, while not incorporating the song file directly, can add rich textures to the performance.

Hear a real-time performance using this instrument below:

My own drone machine

Desktop… what?

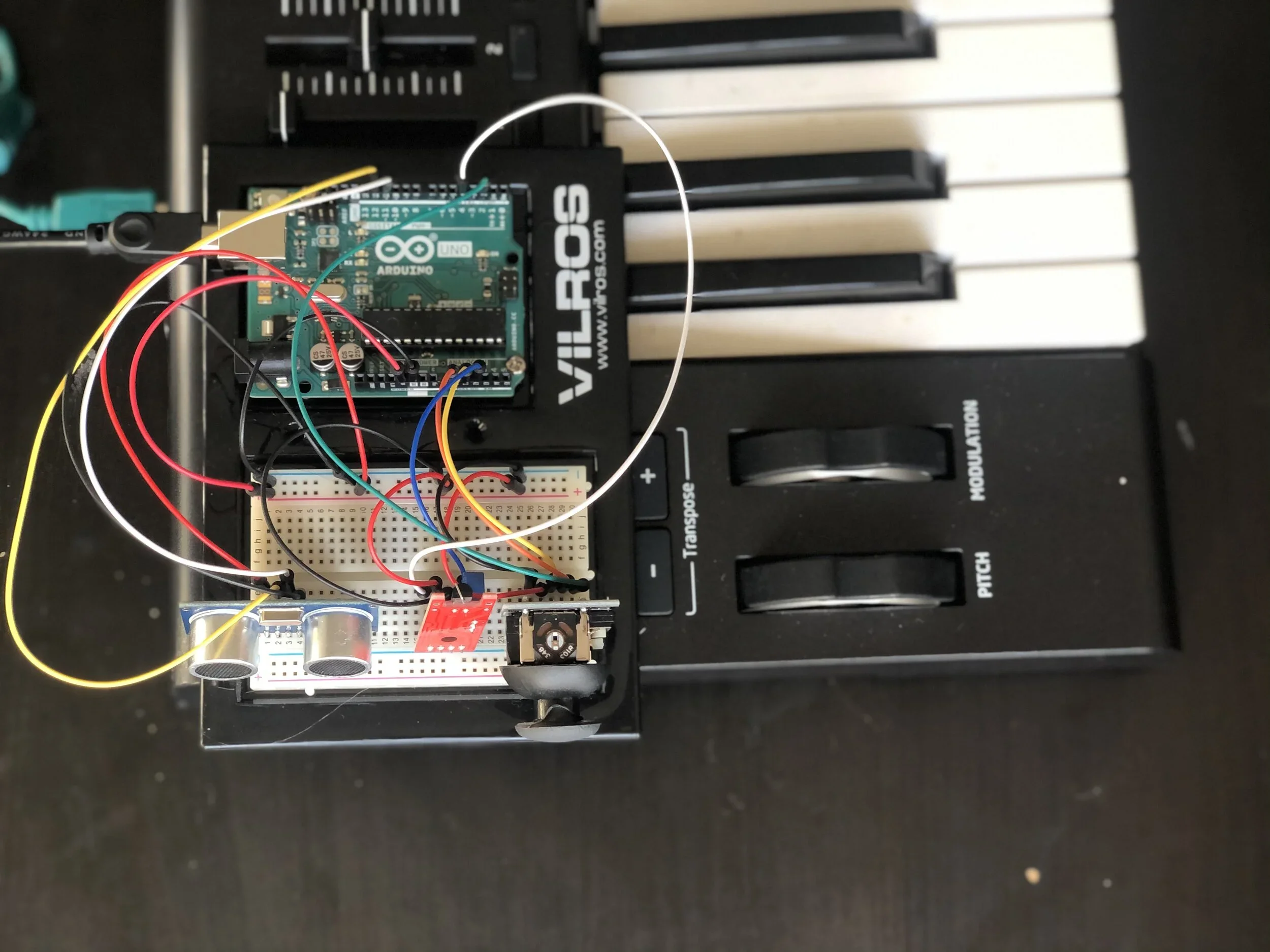

The Desktop Droner was my final project for Stephan Moore’s “Digital Musical Instrument Design” class during the final quarter of my MA in Sound Arts and Industries. We spent the first five weeks or so learning how to use the program Max, and after a week or two exploring Arduino, breadboards, code, and a plethora of sensors, I realized that I wanted to create an instrument that could turn any MIDI device into a drone-like synthesizer. I had heard the album “Diptych” by GROWING at the very end of April, and that really kick-started the idea in my head. Finally, after hearing Éliane Radigue’s “Trilogie de la Mort” for the first time (thanks Stephan), I officially knew I needed to pursue this path. The music resonated with me to a remarkable degree, and honestly it sounded like too much fun to mess around with these sorts of sound aesthetics.

With the limited few weeks I had to sit down and build this project out - as I am no computer scientist and needed the first half of our course to brush up on my previous coding experiences and also learn a new program - I decided I would create something that worked as an attachment or extension of any regular MIDI device. For aesthetic purposes (to keep everything more contained physically and stylistically) I originally wanted to use my 25-key AKAI device, but I did not have that with me at the time. I did have a 49-key Novation device, which worked just fine; just be sure to ignore the octaves I don’t play in the demonstration video.

What can it do?

Since I am handling music’s more minimalist aesthetics, the device is most powerful in the textures and tones of the sounds it creates as opposed to its many creative abilities. With the MIDI device playing notes, The Desktop Droner can create and resolve large reverbs, adjust the global cutoff frequency of the notes being played, change the global harmonicity ratio (think FM synthesis) of those notes using any nearby object, and trigger pre-loaded samples to play in a loop; in the demonstration video you will hear a kick drum with a large reverb effect. Believe it or not, a lot of this can be triggered with a single hand as the other hand plays notes on the MIDI device. This very simple device could be played by two people, and would most likely benefit artistically from the freedoms such a performance would allow each player.

Video demonstration:

In the video above, I set a pedal tone using my Max Patch (code below). Off of this I then build some simple chords using the MIDI device, in this piece mostly alternations between C, Em, and Em7. Towards the beginning, I am demonstrating the reverb and EQ controls with my left hand. Connected to my Arduino device is a Joystick Module, which is constantly giving out data regarding its X and Y orientation. In this case, an increase along its Y-axis increased the reverb (specifically the decay time), while an increase or decrease along the X-axis adjusted the cutoff frequency (either brightening or muffling the tone of the drone).
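The joystick mapping described above can be sketched in Python. This is only an illustration and not the Max patch itself: the 0 to 1023 input range assumes a typical 10-bit Arduino analog reading, and the decay and cutoff ranges, along with the function names, are hypothetical values chosen for the example.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale value from one range to another, clamping to the input range."""
    value = max(in_lo, min(in_hi, value))
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def joystick_to_params(x_raw, y_raw):
    """Turn raw joystick readings into (reverb decay in seconds, cutoff in Hz).

    Assumed ranges (not taken from the real patch):
      raw readings: 0..1023
      decay time:   0.5..20 seconds
      cutoff:       100..8000 Hz
    """
    # Y-axis: pushing the stick further lengthens the reverb decay.
    decay_seconds = scale(y_raw, 0, 1023, 0.5, 20.0)

    # X-axis: sweep the cutoff exponentially so that equal stick movements
    # sound like roughly equal changes in brightness.
    t = scale(x_raw, 0, 1023, 0.0, 1.0)
    cutoff_hz = 100.0 * (8000.0 / 100.0) ** t

    return decay_seconds, cutoff_hz
```

The exponential curve on the cutoff is a design choice for the sketch; a straight linear mapping would also work but tends to bunch most of the audible change at one end of the stick's travel.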

Afterwards, I hold down my fingers on a Touch Sensor and a kick drum begins to play with a large reverb effect on it. When I hold my fingers to this sensor, it reads out an alternating pattern of 1s and 0s. I fed this data into a part of my patch intended to play back audio samples, and it creates a loop. The sampler needs a 1 to begin the loop, and even though the 1s and 0s are coming in quite fast, the program waits until the full sample has played before looping. Ultimately, a loop is created at the rate of the sample we have chosen, which is what I wanted for the artistic purposes of this instrument (I am not looking to speed up, slow down, or chop samples alongside my musical textures).
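The looping behavior described above can be modeled in a few lines of Python. This is a rough sketch of the logic, not the actual Max patch: the class name, the 500 ms sample length, and the 10 ms sensor tick are all assumptions made for the example.

```python
class GatedSampler:
    """Starts a sample on a 1, and ignores further triggers until it has finished."""

    def __init__(self, sample_length_ms):
        self.sample_length_ms = sample_length_ms
        self.busy_until = None  # time (ms) at which the current playback ends

    def trigger(self, value, now_ms):
        """Return True if playback starts at now_ms, False otherwise."""
        if value != 1:
            return False  # 0s never start the sample
        if self.busy_until is not None and now_ms < self.busy_until:
            return False  # sample still playing, so this 1 is ignored
        self.busy_until = now_ms + self.sample_length_ms
        return True


def simulate(sample_length_ms, tick_ms, n_ticks):
    """Feed an alternating 1, 0, 1, 0... stream and collect playback start times."""
    sampler = GatedSampler(sample_length_ms)
    starts = []
    for i in range(n_ticks):
        now_ms = i * tick_ms
        value = 1 if i % 2 == 0 else 0  # touch sensor held down
        if sampler.trigger(value, now_ms):
            starts.append(now_ms)
    return starts


# A hypothetical 500 ms kick sample with the sensor reporting every 10 ms.
starts = simulate(sample_length_ms=500, tick_ms=10, n_ticks=200)
```

Even though a new 1 arrives every 20 ms in this simulation, playback only restarts once the previous pass has ended, so the loop runs at the length of the sample rather than at the speed of the sensor.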

Lastly, you’ll see me moving an external hard drive on my desk, adjusting the overall harmonics of the drones. Here, an Ultrasonic Distance Sensor is reading out information from the Arduino. As I move the external hard drive (or any household object you have nearby) towards the sensor, the sounds begin to globally descend in pitch. I find listening to this effect to be quite similar to that moment when you feel your body falling asleep when you’re in bed. Like a falling sensation… If the object moves away, the pitch ascends. In this piece I am playing, “The Day Everyone Was Late,” I use this ability in the bridge of the piece. I switch from a C note pedal tone to a G, and adjust the harmonicity ratio to twice what it was before. So, instead of just changing chords in the bridge, this device allows a transition in the entire harmonic relationship of the piece. Even if I played the same chords, it would feel like we have gone somewhere completely new (which a bridge is intended to do for the most part). This greatly enhances the effect of returning to the main refrain of the song: once I adjust the harmonicity ratio back to 1 and return to the C, Em, Em7 pattern I was playing in the beginning, the sense of home is extra strong.
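For anyone curious how a distance reading can steer the harmonicity ratio, here is a small Python sketch of the idea. It is not the Max patch: the sensor range (5 to 50 cm), the ratio range (0.5 to 2.0), and the function names are assumptions for illustration. The one convention borrowed from standard FM synthesis is that the modulator frequency equals the carrier frequency multiplied by the harmonicity ratio.

```python
import math


def distance_to_harmonicity(distance_cm, near_cm=5.0, far_cm=50.0,
                            low_ratio=0.5, high_ratio=2.0):
    """Map a distance reading to a harmonicity ratio.

    Closer objects give lower ratios and farther objects give higher ones.
    All range values here are hypothetical.
    """
    d = max(near_cm, min(far_cm, distance_cm))
    t = (d - near_cm) / (far_cm - near_cm)
    return low_ratio + t * (high_ratio - low_ratio)


def modulator_hz(carrier_hz, harmonicity):
    """Standard FM relationship: modulator = carrier * harmonicity ratio."""
    return carrier_hz * harmonicity


def fm_sample(t, carrier_hz, harmonicity, index):
    """One sample of a simple two-operator FM voice at time t (seconds).

    Written in the usual phase-modulation form, where index controls how
    strongly the modulator bends the carrier.
    """
    mod = math.sin(2 * math.pi * modulator_hz(carrier_hz, harmonicity) * t)
    return math.sin(2 * math.pi * carrier_hz * t + index * mod)
```

With a ratio of 1 the modulator sits at the same frequency as the note being played, and doubling the ratio moves it up an octave, which changes the sideband structure of every note at once. That is one way to picture why the whole piece seems to shift rather than just the chord.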